LISA Data Challenge 2b: Spritz

We are glad to announce the release of several datasets in the second LISA Data Challenge, codenamed Spritz.

The purpose of this challenge is to address for the first time the realistic instrumental and environmental noise. The datasets are rather easy in the astrophysical content, moreover, the training data contain the sources that were used in the Sangria training dataset. If you are new to the Challenges, we strongly advise that you first complete the first data challenge Radler before moving to Spritz, specifically, challenges containing the merger of massive black hole binaries (MBHBs) and verification Galactic binaries.

The training Spritz data contain three sets. All three datasets were generated with the extended pipeline used in the Sangria production. All GW sources follow conventions described in the Sangria documentation and the same models (PhenomD for MBHB and Taylor-expanded model for GBs). Besides, we have varied the noise level (assuming the same acceleration and optical metrology noise in each spacecraft) within the same prior as in Sangria. Noise levels are given in the training data.

Features common to all datasets.

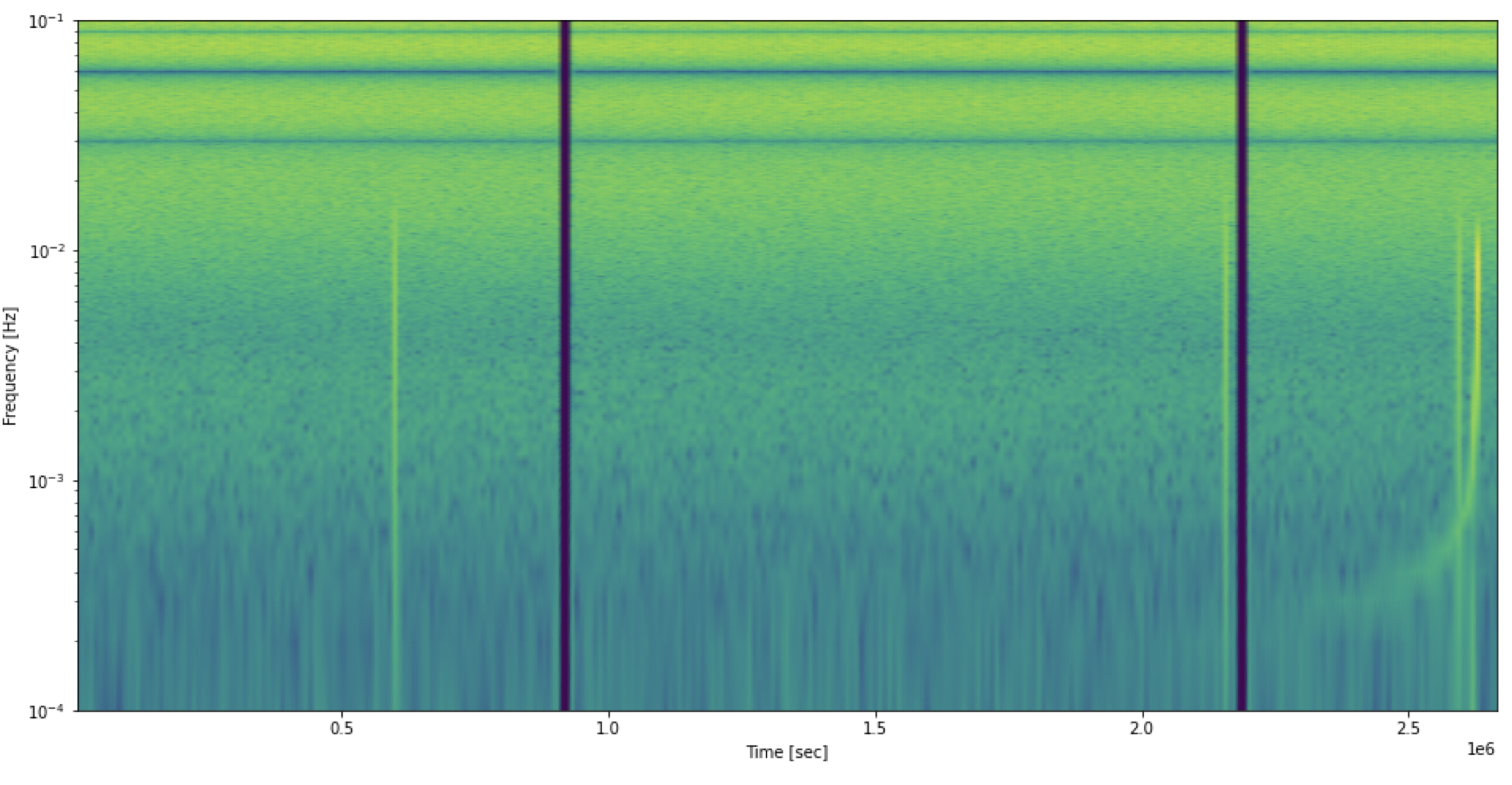

For the first time, we have used second-generation Michelson TDI X, Y, Z combinations, expressed as fractional frequency deviations, downsampled to 5-second cadence. We have used a Keplerian model for the LISA orbits. The data contain scheduled gaps of 7 hours duration each, distributed randomly with intervals between 10 and 15 days. We have included the non-stationary noise from the unresolved population of Galactic binaries (including all binaries with SNR<7 with respect to the total noise budget). For the first time, we have included laser frequency noise, which is strongly suppressed to sub-dominant levels in the TDI combinations. In addition to the total data, the training datasets also contain partial versions of the data, which can be summed to retrieve the total signal: with and without noise, with and without artifacts (including gaps, glitches and non-stationary noise). Orbit and glitch files used as input to the simulator are given, too.

MBHBs in Spritz data.

We have two datasets with merging MBHBs. (i) Dataset with a loud (SNR ~2000) GW signal, lasting for about 31 days. The signal is expected to be detectable a few weeks before the merger and, therefore, is suitable for testing low-latency algorithms. We have added three short loud glitches distributed in the inspiral, late inspiral and near merger parts of the signal. (ii) Dataset with a quiet (SNR ~100) GW signal lasting for one week, with a several-hour-long glitch placed near the merger. Parameters of both MBHBs are available in the training data, as well as the information about glitches The glitches injected in the two MBHB datasets correspond to events detected and fitted during the LISA Pathfinder operations (Phys. Rev. Lett. 120, 061101).

Verification GBs in Spritz.

A 1-year long dataset contains 36 verification binaries, with parameters available in the same data file. We have placed glitches according to a Poisson distribution with a rate of 4 glitches per day, whose model is described in the Spritz documentation.

The LDC working-group members will conduct their own analysis using algorithms of their choice, and invite you to join them (to do so, e-mail us so we can pair you appropriately). Of course, you may organize to work on your own, or with your collaborators. For usage tracking purposes, we request that you set up a login for this website before downloading the datasets (your LDC-1 login will work fine). Please submit your results by October 1st, 2022, using the submission interface and format to be found on this website. Plan to include a description of your methods (or a link to a methods paper) with your submission. We would also greatly appreciate it if you were to share your code (e.g., on GitHub, or on our GitLab). To simplify a bit your life we have made several tutorials which, we hope, you will find useful: Tutorial notebooks link, LISANode simulation model.

While we did our best to check the datasets for correctness, small problems or inconsistencies may have escaped us. The best way to validate the data is to analyze it, so let us know of any problems!